
I'm a Data Scientist on the Ads team at Microsoft, currently working on text-to-image retrieval for ads in the Microsoft Audience Network. I obtained my Ph.D. in Computer Science from The City University of New York, where my research focused primarily on computer vision. My thesis was titled "Deep Learning-Based Human Action Understanding in Videos." During my graduate studies, I had the opportunity to collaborate with many talented people and conducted research on topics such as visual scene understanding, time series analysis, video action detection, cross-modal object retrieval, and sign language understanding.

Email / Google Scholar / GitHub / LinkedIn


News:


Below is a summary of my research projects across several areas of computer vision, including action detection in untrimmed videos, sign language understanding, cross-modal retrieval, and multi-camera vehicle tracking and re-identification.


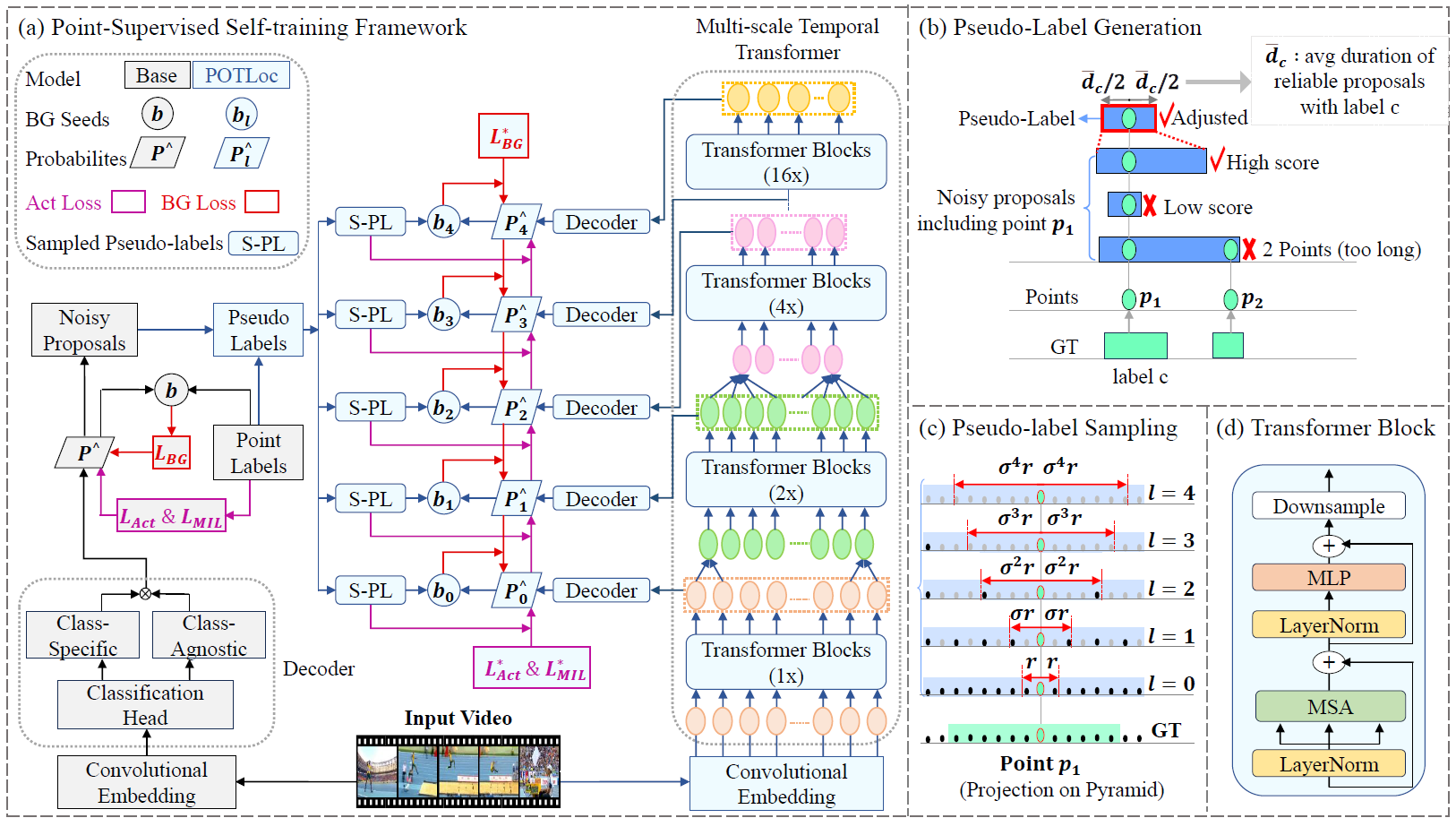

Elahe Vahdani, Yingli Tian. CVIU, 2024. A Pseudo-Label Oriented Transformer for weakly supervised action localization that uses only point-level annotations.


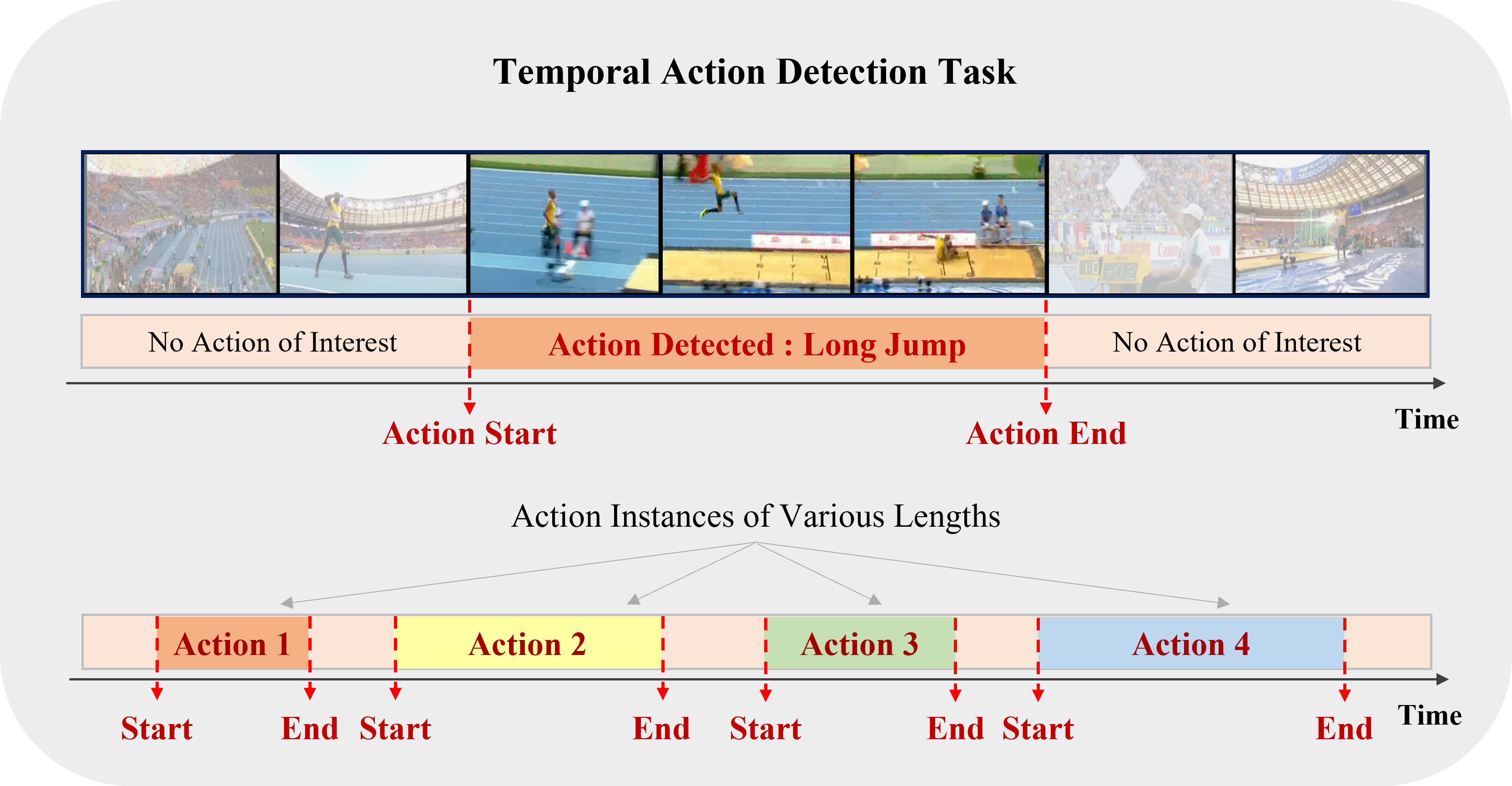

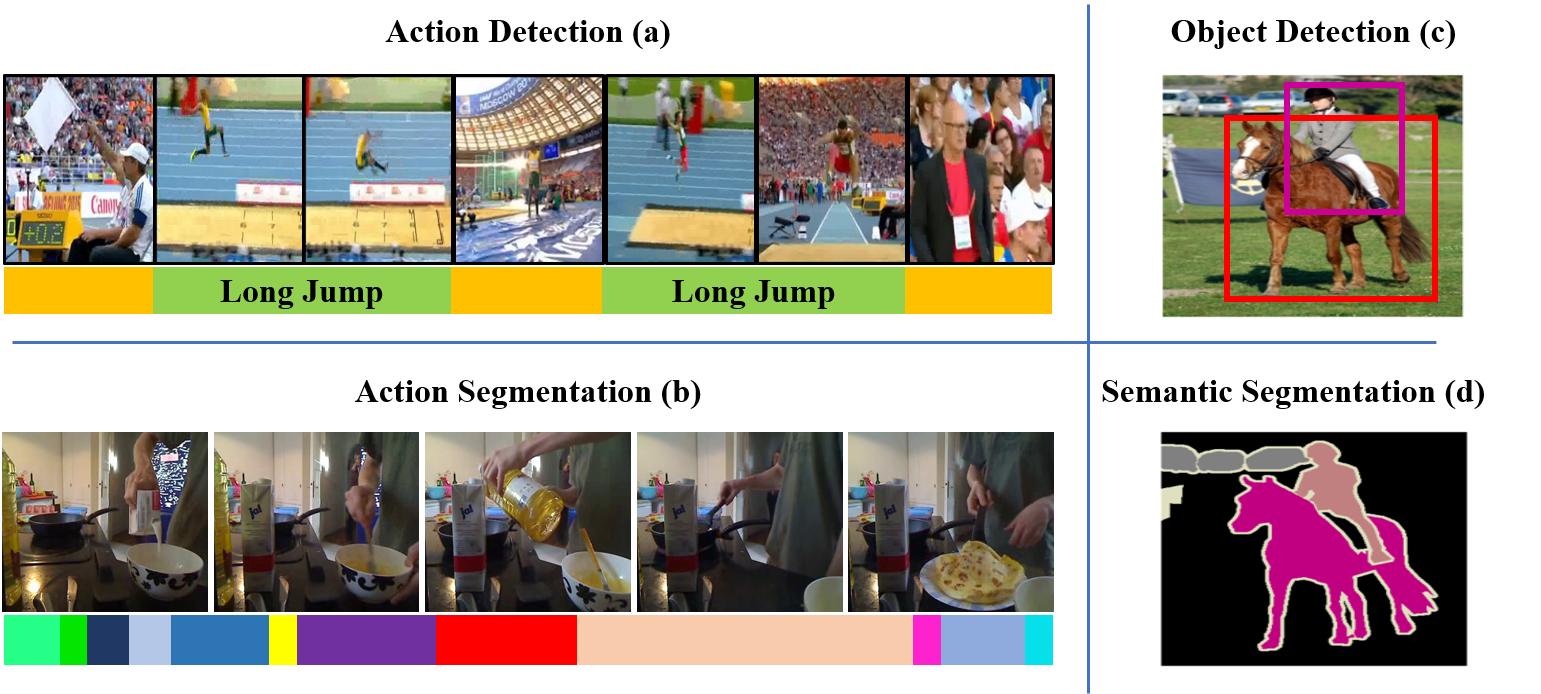

Elahe Vahdani, Yingli Tian. TPAMI, 2022. An extensive overview of deep learning-based algorithms for temporal action detection in untrimmed videos under different levels of supervision.


Longlong Jing*, Elahe Vahdani*, Jiaxing Tan, Yingli Tian. CVPR, 2021. A novel cross-modal framework that maps representations from different modalities (such as images, meshes, and point clouds) into a unified feature space.


Elahe Vahdani, Longlong Jing, Yingli Tian, Matt Huenerfauth. ICPR, 2020. We developed an educational tool that lets sign language students automatically process their signing video assignments and receive immediate feedback on their fluency. The tool uses deep learning to detect grammatically important elements in continuous signing videos.


Elahe Vahdani, Longlong Jing, Yingli Tian, Matt Huenerfauth. IJAIR, 2023. PDF / Project Page / Dataset. A multi-modal, multi-channel framework for real-time recognition of American Sign Language (ASL) signs from RGB-D videos.


Saad Hassan, Larwan Berke, Elahe Vahdani, Longlong Jing, Yingli Tian, Matt Huenerfauth. LREC, 2020. PDF / Project Page / Dataset. We collected a new dataset of color and depth videos, recorded with a Kinect v2 sensor, of fluent American Sign Language (ASL) signers performing sequences of 100 ASL signs.


Yucheng Chen, Longlong Jing, Elahe Vahdani, Ling Zhang, Mingyi He, Yingli Tian. CVPR AI City Workshop, 2019. PDF / Slides / Poster. Our team's solutions for the image-based vehicle re-identification track and the multi-camera vehicle tracking track were featured in the AI City Challenge 2019. Our proposed framework significantly outperformed the then state-of-the-art vehicle ReID method, achieving a 16.3% improvement on the VeRi dataset.


Elahe Vahdani, Amotz Bar-Noy, Matthew P. Johnson, Tarek Abdelzaher. IEEE SECON, 2017. An approximation algorithm for the NP-hard problem of scheduling a given set of n jobs, each with its own deadline, on the minimum number of channels in a sensor network.


Hobbies:
